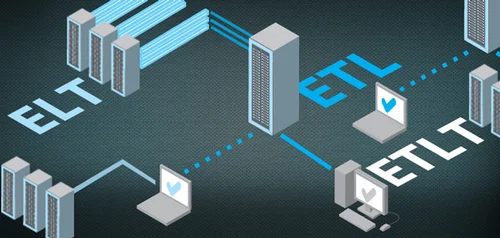

In the dynamic world of data, organizations need modern integration approaches. ETLT (Extract, Transform, Load, Transform) combines ETL (Extract, Transform, Load) and ELT (Extract, Load, Transform) and plays an instrumental role in data integration and fast data processing. Typically, a light transformation such as cleansing or masking sensitive fields runs before loading, and heavier transformations run inside the target warehouse afterwards. Because ETL and ELT each have their own strengths and weaknesses, organizations choose the hybrid ETLT approach to get the “best of both worlds”.

However, implementing ETLT brings its own challenges, each with corresponding solutions. Real-time (RT) data processing matters to modern organizations because real-time insights let them make faster and better decisions, increase operational efficiency, and improve customer experiences. This is where ETLT helps.

In this article, you will see these challenges and their solutions, followed by a detailed look at real-time data extraction and at real-time data transformation and loading.

Challenges

Maintaining High Volume & Data Velocity

When implementing real-time pipelines, the biggest challenge is keeping up the speed needed to transform data and load it into data warehouses as it arrives. Managing data quality and recovering data after failures are also difficult in ETLT.

Establishing Data Accuracy and Consistency

Because ETLT spans several systems and components, keeping data accurate and synchronized across them requires continuous monitoring.
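
One common way to check that two systems agree is to reconcile them: compare the row count and a checksum of the rows on both sides. The sketch below assumes each table can be read as a list of dictionaries with a unique key column; the `id` column name and the helper names are hypothetical, and this is an illustration rather than a production reconciliation job.

```python
import hashlib
from typing import Iterable, Mapping


def table_fingerprint(rows: Iterable[Mapping[str, object]], key: str) -> tuple[int, str]:
    """Return (row count, digest) for a set of rows, independent of row order."""
    digest = hashlib.sha256()
    count = 0
    # Sort by the unique key so both systems hash rows in the same order.
    for row in sorted(rows, key=lambda r: r[key]):
        # Sorting the items gives a stable text form regardless of column order.
        digest.update(repr(sorted(row.items())).encode("utf-8"))
        count += 1
    return count, digest.hexdigest()


def reconcile(source_rows, target_rows, key="id") -> bool:
    """True when source and target hold exactly the same rows."""
    return table_fingerprint(source_rows, key) == table_fingerprint(target_rows, key)
```

In practice, a check like this would run per partition or time window rather than over a whole table, so that a mismatch points to a small slice of data.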

Managing Data Security & Privacy

Controlling access to, and securing, data that moves at high speed is essential. High data volumes need strong processes so that sensitive information stays protected while actionable information still reaches the right users.
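
For privacy, one option is to pseudonymize sensitive fields during the first transformation, before loading, so that raw values never reach the warehouse. This minimal sketch assumes a hypothetical `SENSITIVE_FIELDS` set and a secret key kept outside the code (for example in a secrets manager). It replaces those values with a keyed HMAC-SHA256 hash using only Python's standard library.

```python
import hashlib
import hmac

# Hypothetical list of sensitive column names; adjust to the real schema.
SENSITIVE_FIELDS = {"email", "phone"}


def mask_record(record: dict, secret_key: bytes) -> dict:
    """Return a copy of the record with sensitive values replaced by keyed hashes."""
    masked = {}
    for field, value in record.items():
        if field in SENSITIVE_FIELDS and value is not None:
            token = hmac.new(secret_key, str(value).encode("utf-8"), hashlib.sha256)
            masked[field] = token.hexdigest()  # 64 hex characters
        else:
            masked[field] = value
    return masked
```

Because the same input always yields the same token, masked columns can still be joined and counted, while someone without the key cannot recompute tokens to guess the original values.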

Solutions

Use of Stream Processing Technologies

Stream processing technologies such as Apache Kafka and Apache Flink help with data ingestion and real-time data streaming. Flink has built-in fault tolerance based on periodic checkpoints of application state, which keeps data consistent and handles errors.
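
Kafka consumers and Flink jobs rely on a shared idea for fault tolerance: save the read position (the offset) together with the processing state, and resume from that saved point after a crash. The toy model below is plain Python, not the Kafka or Flink API; the `Checkpoint` class, the `run` function, and the in-memory `store` dictionary are hypothetical stand-ins for durable checkpoint storage.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Checkpoint:
    """Hypothetical checkpoint: next offset to read plus the running state."""
    offset: int = 0
    totals: dict = field(default_factory=dict)


def run(log, store, batch_size=2, crash_after_batches=None):
    """Sum values per key over a list of (key, value) events, resuming from `store`."""
    cp = store.get("checkpoint", Checkpoint())
    done = 0
    while cp.offset < len(log):
        if crash_after_batches is not None and done == crash_after_batches:
            raise RuntimeError("simulated crash")
        batch = log[cp.offset:cp.offset + batch_size]
        totals = dict(cp.totals)  # update a copy so a crash cannot leave half a batch
        for key, value in batch:
            totals[key] = totals.get(key, 0) + value
        # Commit the new offset and the new state together in one step.
        cp = Checkpoint(cp.offset + len(batch), totals)
        store["checkpoint"] = cp
        done += 1
    return cp.totals
```

Because the offset and the totals are saved together after each batch, a run that crashes after one batch and is then restarted produces the same totals as an uninterrupted run, with no event counted twice.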

Implementation of Data Quality Checks and Monitoring

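Data quality checks are an essential procedure because they quickly confirm that everything is working properly. Data profiling shows what the data actually looks like (value ranges, missing values, formats), and validation checks reject or quarantine records that break the expected rules, keeping the data in top shape.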
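
A validation check can be as simple as a set of per-field rules applied to each record, with failing records routed to a quarantine area instead of the warehouse. The rule set and field names below are hypothetical examples, and this is only a sketch of the idea.

```python
from typing import Callable

# Hypothetical rules: each field name maps to a predicate its value must satisfy.
RULES: dict[str, Callable[[object], bool]] = {
    "order_id": lambda v: isinstance(v, int) and v > 0,
    "amount": lambda v: isinstance(v, (int, float)) and v >= 0,
    "currency": lambda v: v in {"USD", "EUR", "GBP"},
}


def validate(record: dict) -> list[str]:
    """Return human-readable problems; an empty list means the record passed."""
    problems = []
    for field, rule in RULES.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not rule(record[field]):
            problems.append(f"invalid value for {field}: {record[field]!r}")
    return problems


def split_valid(records):
    """Route records to a 'good' list and a quarantine list for later review."""
    good, quarantined = [], []
    for record in records:
        (quarantined if validate(record) else good).append(record)
    return good, quarantined
```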

Deployment of Secure Data Transfer Protocols and Encryption

Protecting ETLT data requires applying proven encryption standards and algorithms. Transport Layer Security (TLS) and its predecessor, Secure Sockets Layer (SSL), are protocols that establish a secure connection between a client and a server and encrypt the data that passes through it. SSL is deprecated and no longer considered secure, so new deployments should use TLS.
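
In Python, the standard `ssl` module can build a client-side TLS context for connections to sources and targets. This sketch assumes the remote endpoint presents a certificate signed by a trusted authority. It keeps certificate and hostname verification on and refuses protocol versions older than TLS 1.2.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """TLS client context that verifies certificates and refuses old protocol versions."""
    # create_default_context() turns on certificate verification and hostname checking.
    context = ssl.create_default_context(ssl.Purpose.SERVER_AUTH)
    # Refuse SSL 3.0, TLS 1.0 and TLS 1.1; only TLS 1.2 or newer can be negotiated.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context
```

The context would then be handed to a socket or client library, for example `context.wrap_socket(sock, server_hostname="db.example.com")`, where the host name is a placeholder.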

Extracting Data in Real-Time

Data Sources and Formats for Data Extraction

Relational databases such as MySQL, as well as NoSQL databases, are common sources of live data. Apache Kafka, a distributed event streaming platform, is widely used to gather real-time data and pass it on to downstream systems.
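
Sources often differ in format: for example, events might arrive as newline-delimited JSON while a database export is CSV, so extraction usually ends by mapping both onto one shape. In this sketch, the target schema (`id`, `status`, `source`) and the source labels are hypothetical.

```python
import csv
import io
import json


def from_json_lines(text: str) -> list[dict]:
    """Parse newline-delimited JSON, one event per line, skipping blank lines."""
    return [json.loads(line) for line in text.splitlines() if line.strip()]


def from_csv(text: str) -> list[dict]:
    """Parse CSV with a header row; every value arrives as a string."""
    return list(csv.DictReader(io.StringIO(text)))


def normalize(record: dict, source: str) -> dict:
    """Map a raw record onto one shared (hypothetical) schema."""
    return {
        "id": int(record["id"]),                   # CSV gives "3", JSON gives 3
        "status": str(record["status"]).lower(),   # unify casing across sources
        "source": source,                          # keep lineage for debugging
    }
```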

Techniques for Extracting Real-Time Data

Change data capture (CDC) and event streaming are two techniques organizations use to extract real-time data. CDC captures only the rows that were inserted, updated, or deleted since the last extraction, so it limits the volume of data to move and lets organizations process and analyze changes faster. Event streaming, on the other hand, filters, analyzes, and processes data continuously as it moves through a pipeline.
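
Production CDC is usually log-based: tools such as Debezium read a database's transaction log, for instance the MySQL binlog. A simpler, query-based variant polls for rows whose change marker is newer than the last one seen. The sketch below uses SQLite from Python's standard library and assumes a hypothetical `orders` table with a `version` column that writers increase on every change.

```python
import sqlite3


def extract_changes(conn: sqlite3.Connection, last_seen: int) -> tuple[list[tuple], int]:
    """Return rows changed since `last_seen` and the new high-water mark."""
    rows = conn.execute(
        "SELECT id, status, version FROM orders WHERE version > ? ORDER BY version",
        (last_seen,),
    ).fetchall()
    new_watermark = rows[-1][2] if rows else last_seen
    return rows, new_watermark


# Demo: an in-memory table whose writers bump `version` on every change.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER PRIMARY KEY, status TEXT, version INTEGER)")
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [(1, "new", 1), (2, "new", 2)])
first_batch, watermark = extract_changes(conn, 0)           # both rows, watermark 2
conn.execute("UPDATE orders SET status = 'paid', version = 3 WHERE id = 1")
second_batch, watermark = extract_changes(conn, watermark)  # only order 1, watermark 3
```

Unlike log-based CDC, this polling approach cannot see rows that were hard-deleted, and each poll adds query load on the source database.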

Selecting the Proper Data Extraction Strategy

Organizations collect data for a reason: the final data supports quick, effective decision-making, helps create actionable strategies, and can change the business landscape. Tracking the volume of data and how frequently it is updated tells organizations how their pipelines are running, so they can estimate how soon the final data will be available. These two factors should guide the choice of extraction strategy.

Transforming and Loading Data in Real-Time

Techniques for Transforming Data in Real-Time

Data may undergo simple or complex changes during processing or filtering before it reaches its destination. These transformations can be performed manually, automatically, or with a combination of both.

- Data Integration– Data integration involves cleaning, transforming, analyzing, and loading data from different sources into a single, unified dataset.

- Data Wrangling Tools– These tools convert raw data into understandable formats and organize it for further processing.
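
The two steps above can be sketched together: a wrangling step that tidies each raw record, and an integration step that merges records from several sources into one view. The matching key (`email`) and the merge rule (the first source to supply a field wins) are assumptions chosen for illustration.

```python
def clean(record: dict) -> dict:
    """Wrangling: trim strings, drop empty values, and lower-case the email."""
    out = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip()
            if value == "":
                continue  # treat blank strings as missing
        out[key] = value
    if "email" in out:
        out["email"] = out["email"].lower()
    return out


def integrate(*sources):
    """Integration: merge cleaned records from several sources, keyed by email.

    For a field present in several sources, the first source's value is kept;
    later sources only fill in fields that are still missing.
    """
    merged = {}
    for source in sources:
        for raw in source:
            record = clean(raw)
            key = record.get("email")
            if key is None:
                continue  # cannot match without a key; a real pipeline would quarantine it
            target = merged.setdefault(key, {})
            for field, value in record.items():
                target.setdefault(field, value)
    return list(merged.values())
```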

Challenges and Solutions for Loading Data in Real-Time

- Scalability– Processing a high volume of data in real time is possible, but organizations must plan for scale. They should invest in high-performing, scalable tools so that data conversion produces few or no errors as volume grows.

- Data Consistency– Data generated in real time from different sources like social platforms or search engines may not be accurate. Organizations should keep an eye on the consistency of data when collected in large volumes and from different sources.
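
Both loading concerns can be handled in the load step itself: micro-batching keeps throughput manageable as volume grows, and idempotent upserts keep the target consistent when a batch is replayed or events arrive out of order. This sketch uses SQLite's `INSERT ... ON CONFLICT DO UPDATE` (available since SQLite 3.24) and a hypothetical `orders` table with a `version` column; data warehouses offer similar `MERGE` statements with their own syntax.

```python
import sqlite3
from itertools import islice


def batches(records, size):
    """Yield lists of up to `size` records (micro-batching)."""
    it = iter(records)
    while chunk := list(islice(it, size)):
        yield chunk


def load(conn, records, batch_size=500):
    """Upsert records batch by batch; replaying a batch leaves the table unchanged."""
    for chunk in batches(records, batch_size):
        with conn:  # one transaction per batch: all rows commit or none do
            conn.executemany(
                "INSERT INTO orders (id, status, version) VALUES (:id, :status, :version) "
                "ON CONFLICT(id) DO UPDATE SET status = excluded.status, version = excluded.version "
                "WHERE excluded.version > orders.version",  # ignore stale or repeated events
                chunk,
            )
```

Replaying the same events leaves the table unchanged, and an out-of-order event carrying a lower `version` does not overwrite newer data.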

Best Practices for Real-Time ETLT Architecture Design

Redundancy is an ideal choice for real-time ETLT architecture design. It provides fault tolerance by duplicating networks, servers, and databases, which removes single points of failure at different levels of the system.

Fault tolerance allows a system to respond to software and hardware failures and keep performing even when a disturbance occurs. Like fault tolerance, data governance helps create a robust ETLT architecture by ensuring data privacy, compliance, security, and data quality.
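
At the application level, redundancy and fault tolerance often show up as retries with exponential backoff plus failover to a replica. This is a minimal sketch: the endpoint names and the `operation` callable are hypothetical, and only `ConnectionError` is treated as retryable.

```python
import time
from typing import Callable, Sequence, TypeVar

T = TypeVar("T")


def call_with_failover(endpoints: Sequence[str], operation: Callable[[str], T],
                       attempts_per_endpoint: int = 3, base_delay: float = 0.1) -> T:
    """Try each redundant endpoint in order, retrying with exponential backoff."""
    last_error = None
    for endpoint in endpoints:
        for attempt in range(attempts_per_endpoint):
            try:
                return operation(endpoint)
            except ConnectionError as exc:  # only connection failures are retried
                last_error = exc
                time.sleep(base_delay * 2 ** attempt)  # 0.1 s, 0.2 s, 0.4 s, ...
    raise RuntimeError("all endpoints failed") from last_error
```

For example, if `operation` keeps failing against `"primary"`, the call moves on to `"replica"` after three attempts instead of stopping the pipeline.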

Conclusion

As discussed, in the ever-changing world of data, ETLT can produce high-quality data that is well integrated, synchronized, analyzed, and delivered to the right destination. However, it comes with challenges such as scalability, data privacy, and data security, and organizations need to address them.

Real-time ETLT could be a big prospect for the future of digital data. It can handle high-volume data securely, and data quality checks and stream processing technologies help ensure smooth data operations.